An introduction to the analytics and AI features of Reveal 11.

Reveal 11 includes a number of data analytics and AI-enabled features that can expedite eDiscovery and investigative workflows and help users make better-informed decisions faster.

Here is a list of key analytics and AI features:

| Category | Feature | Description |
| --- | --- | --- |
| Analytics | Cluster Wheel | Reveal utilizes advanced AI to group lexically similar documents together in virtual clusters. These clusters are then organized and presented on an interactive data visualization. Users can leverage this visualization to explore data at a zero state or analyze connections between topics, people, and other metadata. |
| Analytics | Dashboard | This interactive data visualization helps users apply single-click metadata filters to a data set. Users can then analyze the filter results. |
| Analytics | Concept Search | Users can execute a Concept Search as a single term, phrase, or paragraph. Reveal 11 identifies the related concepts and presents them to the user for analysis. This type of search can uncover hidden connections that exist between terms or reveal the unknown terms or phrases that may be important in the context of a legal matter. |
| AI | Classifiers & AI Models | Classifiers are designed to teach machines to categorize documents based on manually coded examples. Classifiers produce AI Models, which generate Predictive Scores for documents within your project. These scores are used to prioritize documents for attorney review. Predictive Scores can help users determine whether a document is relevant / not relevant or privileged / not privileged. |
| AI | AI Tags | AI Tags are like review tags with one exception: AI Tags automatically create a Classifier. A user's coding decisions from the AI Tag are then used to train the machine. |
| AI | AI Driven Batches | AI Driven Batches are small groups of documents that are set aside for subject matter expert review. Reveal automatically selects the best documents for training the Classifier. Documents from AI Driven Batches are the coded examples used to train Classifiers. |
| AI | Transcription | This AI-powered feature allows users to transcribe audio and video files to text. |
| AI | Image Labeling | This AI-powered feature allows users to automatically categorize images using text labels. |
| AI | Translation | This AI-powered feature can be used to translate over 25,000 different business language combinations. |

AI Tags

AI Tags are similar to regular coding tags, with one exception: AI Tags automatically create a Classifier. A user's coding decisions from the AI Tag are then used to train the machine.

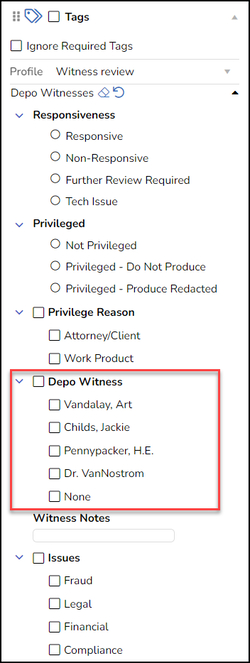

Classifiers are created by setting Prediction Enabled when creating or updating tags and their choices; the screen where this is selected is shown below. When administrative users create the AI Tag, Reveal will automatically create a new Classifier to train the machine on how to score documents (with scores between 0 and 100).

- For mutually exclusive tags (represented as radio buttons in the tag pane), the system will create one Classifier and use the “positive” and “negative” choices to train the Classifier.

- For multi-select tags (represented as check boxes in the tag pane), the system will create a Classifier for each choice. For example, if you have a multi-select tag called “Fraud”, you can have tag choices such as “Compliance”, “Financial”, “Legal”, etc. There would be a Classifier created for each of these choices. The classifiers for the above example can be seen in this screenshot of the Supervised Learning Classifiers screen.

[Screenshot: Classifiers Screen]
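The relationship between tag type and the number of Classifiers described above can be sketched in a few lines of Python. This is an illustration only, not Reveal's implementation or API: the `Tag` class, the `classifiers_for` function, and the naming of the resulting Classifiers are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Tag:
    """Hypothetical stand-in for a tag with Prediction Enabled."""
    name: str
    choices: List[str]
    mutually_exclusive: bool  # True = radio buttons, False = check boxes


def classifiers_for(tag: Tag) -> List[str]:
    """Return illustrative names for the Classifiers a tag would produce."""
    if tag.mutually_exclusive:
        # One Classifier, trained on the positive and negative choices.
        return [tag.name]
    # Multi-select tag: one Classifier per choice.
    return [f"{tag.name}: {choice}" for choice in tag.choices]


responsiveness = Tag("Responsiveness", ["Responsive", "Non-Responsive"], True)
fraud = Tag("Fraud", ["Compliance", "Financial", "Legal"], False)

print(classifiers_for(responsiveness))
print(classifiers_for(fraud))
```

In this sketch the mutually exclusive Responsiveness tag yields a single Classifier, while the multi-select Fraud tag yields three, one for each choice.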

Once created, coding documents with these tags will teach the system how to calculate scores for all documents in the project dataset, and help to focus review assignments, clustering, reporting, and other aspects of project data.

This is what the classifiers shown above look like in a sample Document Review screen tag pane; in the example below, the Responsive and Non-Responsive choices for Responsiveness are also AI tags, with the other two choices only there to inform the project manager of issues in the document's status.

For further details on AI Tags see How to Add AI Model to Library.

AI Batches

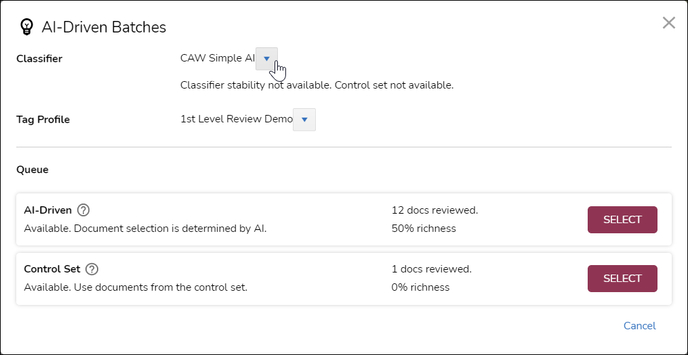

Reveal 11 has a tool, conveniently located in the Dashboard toolbar, for creating batches from a selected AI classifier model and a selected tag profile. AI-Driven Batches makes it simple to draw sample documents for supervised learning or control set review.

- From the Dashboard, click AI-Driven Batches.

- Select the Classifier model to be used in selecting documents. The stability status and availability of a control set for any classifier selected will be displayed below the selection.

- Select the Tag Profile from your list of available profiles; you may have only one available at a time.

- Queue will display batching and assignment status once a choice is selected.

- AI-Driven: document selection is determined by AI, creating a sample set of documents for batching and supervised learning using tags. Click Select to create this batch.

- Control Set: click Select to generate a set of documents for control coding.

- Once you click Select, a message will appear in the Queue area notifying whether batch creation was successful.

- You will receive an email when an assignment folder has been generated for you using the documents selected by the classifier.

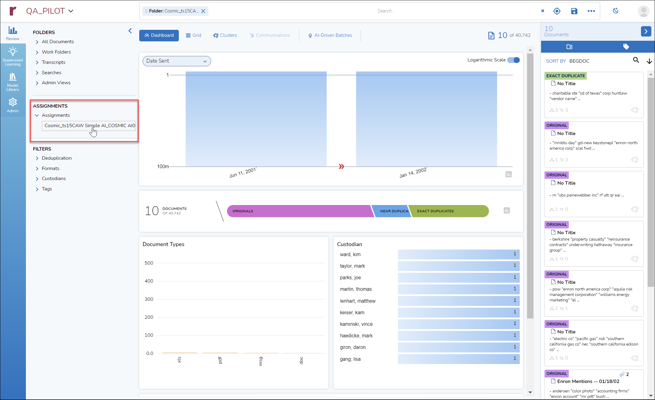

- A new folder of the AI-Driven Batch documents will appear under ASSIGNMENTS in your Sidebar. You may need to refresh your browser for this to appear.

- You may now proceed to tag these documents to continue training the AI model.

AI Model Scoring

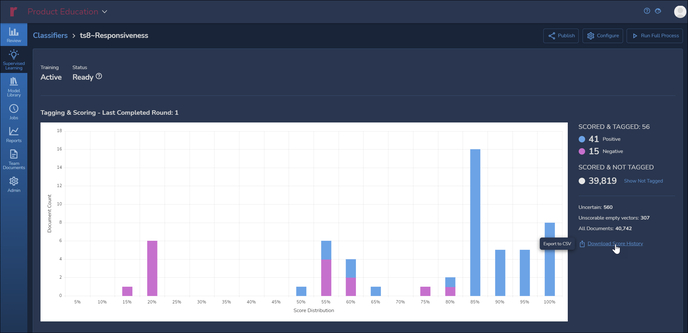

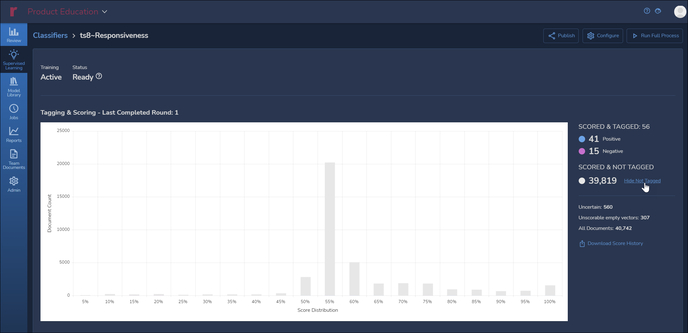

Each time an AI tag choice is selected, the classifier updates an AI Model. The AI Model compiles the coding with algorithmic analysis of the document language to generate scoring of documents under review. Here is an example of a tagging and scoring graph under a classifier's View Details screen in Supervised Learning (shown in the optional Dark Mode).

This graph shows actual tagging for this classifier, broken out as document counts of Positive and Negative reviewer assessments of Responsiveness as compared with the related AI Model's assessment of likely responsiveness. By toggling Show Not Tagged under SCORED & NOT TAGGED, a project manager can see at a glance how user coding compares with the model's prediction.
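As a rough illustration of the comparison this graph makes, the sketch below tallies reviewer tagging against score bands. It is not Reveal's code: the function name, the band width of 10, and the sample data are assumptions, and the actual chart may group scores differently.

```python
from collections import Counter
from typing import Iterable, Tuple


def tally_by_score_band(
    docs: Iterable[Tuple[float, str]], band_width: int = 10
) -> Counter:
    """Count documents per (score band start, reviewer label).

    Each document is a (predictive_score, label) pair, where the score is
    between 0 and 100 and the label is "Positive", "Negative" or "Not Tagged".
    """
    counts: Counter = Counter()
    for score, label in docs:
        # Place a score of exactly 100 in the top band rather than a band of its own.
        band_start = min(int(score // band_width) * band_width, 100 - band_width)
        counts[(band_start, label)] += 1
    return counts


# Hypothetical sample data: (score, reviewer label).
sample = [
    (92, "Positive"),
    (88, "Positive"),
    (15, "Negative"),
    (95, "Negative"),    # reviewer disagrees with a high score
    (50, "Not Tagged"),
    (100, "Positive"),
]

for (band, label), n in sorted(tally_by_score_band(sample).items()):
    print(f"{band:3d}-{band + 10:3d}  {label:<10}  {n}")
```

A Negative tag in a high score band, or a Positive tag in a low one, marks a document where reviewer coding and the model's prediction disagree.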

NOTE: Negative tagging is extremely important in training AI models because negative examples help define document language that fails to address the subject matter of the tag, which in this example is Responsiveness.

Below that is the Control Set chart, with numbers to the right displaying Precision, Recall, F1 and Richness for the set Score Threshold. As the AI model is trained by the classifier, these figures show how accurate the model's relevant calls are (Precision), how many of the relevant documents it finds (Recall), and what proportion of the control set is relevant (Richness). F1 is a summary score that balances Precision and Recall. The slider below the graph allows the user to see the number of documents required to achieve a desired level of Precision balanced against Recall.

[Screenshot: View Classifier Details, Control Set]
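For readers who want to see how these four figures relate, here is a minimal sketch using the standard definitions of Precision, Recall, F1 and Richness. It assumes a document counts as predicted relevant when its score is at or above the threshold; Reveal's exact calculation is not documented here, and the function name and sample control set are hypothetical.

```python
from typing import Dict, List, Tuple


def control_set_metrics(
    control_set: List[Tuple[float, bool]], threshold: float
) -> Dict[str, float]:
    """Compute standard metrics for a coded control set at a score threshold.

    Each entry is (predictive_score, reviewer_tagged_positive).
    """
    true_pos = sum(1 for score, pos in control_set if score >= threshold and pos)
    predicted_pos = sum(1 for score, _ in control_set if score >= threshold)
    actual_pos = sum(1 for _, pos in control_set if pos)

    precision = true_pos / predicted_pos if predicted_pos else 0.0
    recall = true_pos / actual_pos if actual_pos else 0.0
    # F1 is the harmonic mean of Precision and Recall.
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    # Richness is the share of the control set that reviewers tagged positive.
    richness = actual_pos / len(control_set) if control_set else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "richness": richness}


# Hypothetical control set: (score, tagged positive by reviewer).
control = [(95, True), (80, True), (70, False), (40, True), (20, False), (10, False)]
metrics = control_set_metrics(control, threshold=60)
print(metrics)
```

With this sample data and a threshold of 60, two of the three documents scored above the threshold are truly positive and two of the three positive documents are found, so Precision, Recall and F1 are each two thirds, and Richness is one half. Raising or lowering the threshold trades Precision against Recall, which is the balance the slider lets you explore.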

For further details on evaluating AI Tags see How to Evaluate an AI Model.

Last Updated 1/19/2023