This article provides both visual and written instructions for evaluating the performance of an AI model in scoring document content.


Reveal uses AI models to score documents. A model is based either on reviewers' coding decisions on Prediction Enabled tags (referred to as Supervised Learning) or on published AI Model Library classifiers added to a project. Reveal can use these models to score and assign documents automatically, and to compare, report on, and update its calculations as Supervised Learning continues (a process called Active Learning).

This article explains how to evaluate an AI model.

- With a project open, select Supervised Learning from the Navigation Pane.

- The Classifiers screen opens, displaying a card for each classifier in the current project. You can toggle between Light and Dark Mode, as shown here.

[Image: Classifiers screen in Dark Mode]

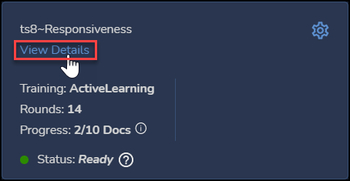

- To examine the status of a model, click View Details below the title on its Classifier card.

- The details window for the selected classifier opens on the Tagging & Scoring view, which is selected under PROGRESS in the left-side Classifier Details navigation pane. The Not Tagged scores (the AI's assessment of the likely scoring distribution for this classifier) are shown first.


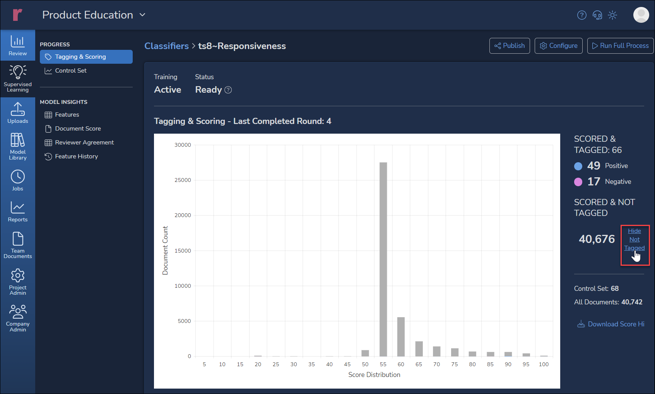

To see tagging activity, click Hide Not Tagged beside the bar graph.

- The image above shows the Tagging & Scoring graph. It shows actual tagging for this classifier as counts of documents that reviewers tagged Positive or Negative for Responsiveness, compared with the AI model's assessment of likely responsiveness. After 4 rounds, 66 documents have been tagged: 49 Positive and 17 Negative. This lets a project manager see at a glance how reviewer coding compares with the model's predictions.

NOTE: Negative tagging is extremely important in training AI models, because negative examples help define document language that does not address the subject of this tag (here, Responsiveness). Further training will help stabilize the classifier and reduce the number of Uncertain documents. Reveal includes Uncertain documents in batching to better understand the factors involved in scoring for this classifier.

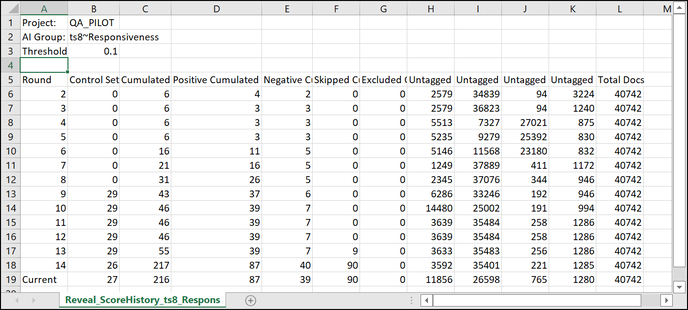

- Click Download Score History, to the right of the graph, to export the numbers as a CSV table, as in the example below.

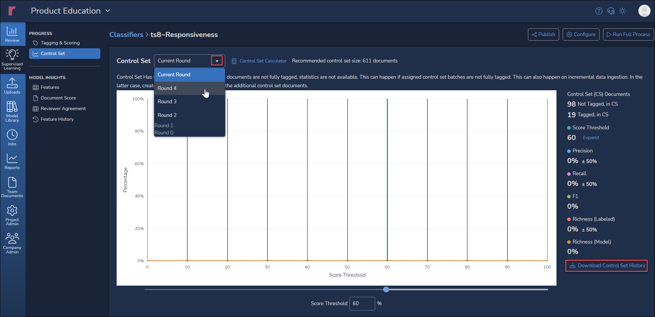

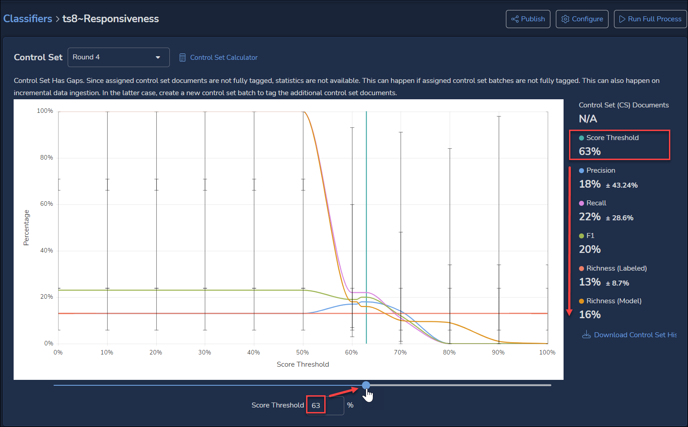
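If you analyze the exported Score History outside Reveal, a few lines of Python can total the tagging counts across rounds. This is a minimal sketch: the column names `Round`, `Positive`, and `Negative`, and the assumption that each row holds only that round's counts, are hypothetical and should be checked against your own export. The sample rows are invented so that they total the 49 Positive and 17 Negative tags from the example above.

```python
import csv
import io
from typing import TextIO


def cumulative_tag_counts(csv_file: TextIO) -> list[tuple[str, int, int]]:
    """Return (round, running Positive total, running Negative total) per row.

    Assumes hypothetical columns "Round", "Positive" and "Negative", where each
    row holds only the counts tagged in that round.
    """
    totals = []
    positive = negative = 0
    for row in csv.DictReader(csv_file):
        positive += int(row["Positive"])
        negative += int(row["Negative"])
        totals.append((row["Round"], positive, negative))
    return totals


# Invented per-round counts, for illustration only.
SAMPLE = "Round,Positive,Negative\n1,10,5\n2,12,4\n3,13,4\n4,14,4\n"
for rnd, pos, neg in cumulative_tag_counts(io.StringIO(SAMPLE)):
    print(f"Round {rnd}: {pos + neg} tagged, {pos / (pos + neg):.0%} Positive")
```

If your export already reports running totals, skip the accumulation and read the values directly.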

- The next screen under PROGRESS in the Classifier Details navigation shows Control Set activity for the Current Round and for prior rounds. A Control Set is a sample, or set of samples, used to evaluate and verify the project's AI analytics.

- Details for the graph, including Documents to Review, are again presented on the right.

[Image: Classifier Details, Control Set (prior round)]

- Use the slider indicated by the arrow in the illustration above to set a score threshold and see the corresponding document counts. You can set the Score Threshold, either by entering a number or by moving the slider, to a precision of 1% (finer than the graph's 10% bars); the reported values update accordingly.

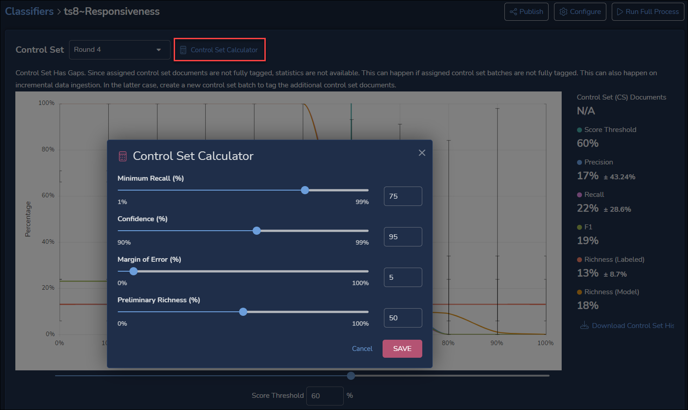
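To see why 1% precision matters, consider how document counts and common review metrics change when the threshold moves within a single 10% bar. The sketch below assumes scores on a 0-100 scale and uses the standard definitions of recall (positive control documents at or above the threshold, divided by all positive control documents) and precision (positive documents at or above the threshold, divided by all documents at or above it). It is an illustration, not Reveal's internal calculation, and the sample data is invented.

```python
from dataclasses import dataclass


@dataclass
class ControlDoc:
    score: float    # model score on an assumed 0-100 scale
    positive: bool  # reviewer's tag on the control set document


def metrics_at_threshold(docs: list[ControlDoc], threshold: int) -> dict[str, float]:
    """Count documents at or above the threshold and compute recall and precision."""
    if not 0 <= threshold <= 100:
        raise ValueError("threshold must be between 0 and 100")
    tp = sum(1 for d in docs if d.positive and d.score >= threshold)
    fp = sum(1 for d in docs if not d.positive and d.score >= threshold)
    fn = sum(1 for d in docs if d.positive and d.score < threshold)
    at_or_above = tp + fp
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / at_or_above if at_or_above else 0.0
    return {"at_or_above": at_or_above, "recall": recall, "precision": precision}


# Invented control set: (score, reviewer tagged Positive?)
DOCS = [ControlDoc(s, p) for s, p in [
    (92, True), (81, True), (67, True), (64, False),
    (58, True), (40, False), (35, False), (12, False),
]]

# 60 and 65 fall in the same 10% bar but give different results.
for t in (60, 65):
    print(t, metrics_at_threshold(DOCS, t))
```

Here moving from 60 to 65 drops the one negative document scored 64, so precision rises while recall is unchanged.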

- The basic Control Set Calculator, introduced in Reveal 11.9, recommends how many documents a Control Set needs to meet the parameters you set (with the sliders or numeric fields) for Minimum Recall, Confidence, Margin of Error, and Preliminary Richness.

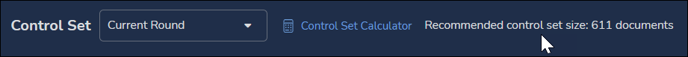

When you SAVE the above settings, this recommendation appears:

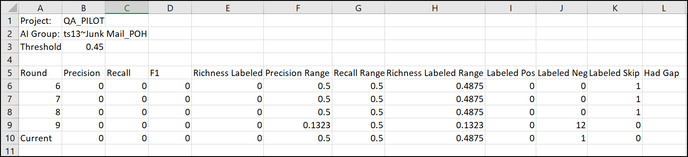
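This article does not state the formula behind the calculator's recommendation. As a rough illustration of how the four parameters interact, the sketch below uses a common normal-approximation approach, which is an assumption and may differ from Reveal's method: estimate how many positive documents are needed to measure a recall of Minimum Recall within the Margin of Error at the given Confidence, then divide by Preliminary Richness to get the total number of documents to sample. The function name `control_set_size` is hypothetical.

```python
import math
from statistics import NormalDist


def control_set_size(min_recall: float, confidence: float,
                     margin_of_error: float, richness: float) -> tuple[int, int]:
    """Return (positive documents needed, total control set size).

    Assumed method: sample size for estimating a proportion (here, recall)
    under the normal approximation, scaled up by the expected richness.
    """
    for name, value in (("min_recall", min_recall), ("confidence", confidence),
                        ("margin_of_error", margin_of_error), ("richness", richness)):
        if not 0 < value < 1:
            raise ValueError(f"{name} must be between 0 and 1")
    z = NormalDist().inv_cdf((1 + confidence) / 2)  # two-sided critical value
    positives = math.ceil(z**2 * min_recall * (1 - min_recall) / margin_of_error**2)
    total = math.ceil(positives / richness)  # documents to sample to find them
    return positives, total


print(control_set_size(0.75, 0.95, 0.05, 0.25))  # (289, 1156)
```

The design point this illustrates: lower richness inflates the control set quickly, because the positive documents needed for a reliable recall estimate must be found within a mostly negative sample.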

- At the bottom of the details is a Download Control Set History link, which exports the numbers as a CSV table.


- You can view details on the factors underlying Reveal's analytics in the MODEL INSIGHTS section.

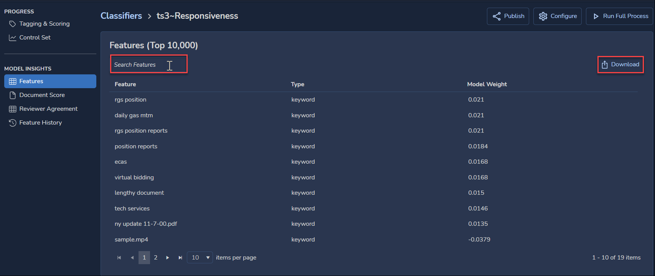

- Features lists the keywords, entities, and other values that contribute to the current classifier's model, along with the weight given to each feature. You can filter this report by Feature name and sort it by any column by clicking the column heading, which toggles between ascending and descending order; any sort other than the default (descending by Model Weight) is marked by a red arrow next to the column heading. The screen displays at most 10,000 feature items for performance, but the CSV download is not limited to those items. Downloading requires Run Feature & Score Reports to be checked in the classifier's Advanced Settings.

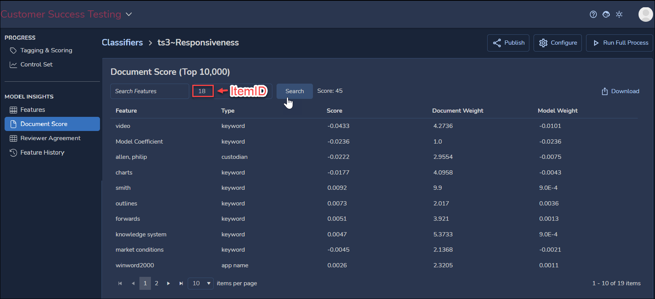
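Because the downloaded Features CSV is not limited to 10,000 items, you may want to filter and sort it yourself. The sketch below mirrors the on-screen behavior (filter by feature name, default descending sort on Model Weight). The column name `Model Weight` comes from the report; the `Feature` column name and the sample rows are assumptions, so adjust them to match your file.

```python
import csv
import io
from typing import TextIO


def top_features(csv_file: TextIO, name_filter: str = "",
                 limit: int = 10) -> list[tuple[str, float]]:
    """Filter by feature name (case-insensitive substring), sort by Model Weight."""
    # Keep rows whose (assumed) "Feature" column contains the filter text.
    rows = [row for row in csv.DictReader(csv_file)
            if name_filter.lower() in row["Feature"].lower()]
    # Match the report's default order: descending by Model Weight.
    rows.sort(key=lambda row: float(row["Model Weight"]), reverse=True)
    return [(row["Feature"], float(row["Model Weight"])) for row in rows[:limit]]


# Invented sample rows, for illustration only.
SAMPLE = "Feature,Model Weight\ncontract,2.4\nbreach,3.1\ncontractor,0.8\nlunch,0.1\n"
print(top_features(io.StringIO(SAMPLE), name_filter="contract"))
```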

- Document Score applies MODEL INSIGHTS to a single document, selected by its ItemID control number. The overall score for the selected document appears next to the Search button. You can search (filter) features, sort by any column heading, and download the report as a CSV (if Run Feature & Score Reports is checked in the classifier's Advanced Settings); the download is not restricted to the top 10,000 items displayed for performance. Here the columns are expanded to include each feature's Score and Document Weight as well as its Model Weight.

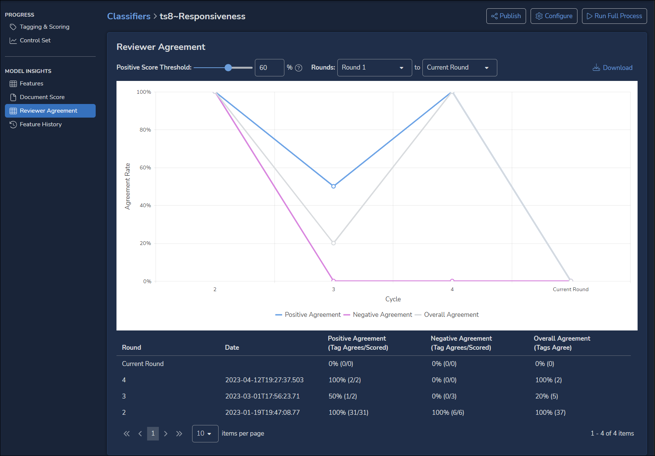
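This article does not say how the Score, Document Weight, and Model Weight columns combine. As a generic illustration only, the sketch below assumes a simple linear model in which each feature contributes its Document Weight multiplied by its Model Weight, and ranks features by that contribution. This is a swapped-in textbook technique, not Reveal's documented scoring, and the feature rows are invented.

```python
from typing import NamedTuple


class FeatureRow(NamedTuple):
    feature: str
    document_weight: float  # how strongly the feature appears in this document
    model_weight: float     # weight the model gives the feature


def contributions(rows: list[FeatureRow]) -> list[tuple[str, float]]:
    """Per-feature contribution under an assumed linear model, largest first."""
    scored = [(r.feature, r.document_weight * r.model_weight) for r in rows]
    return sorted(scored, key=lambda item: item[1], reverse=True)


def raw_score(rows: list[FeatureRow]) -> float:
    """Sum of contributions; a real classifier would also rescale this."""
    return sum(r.document_weight * r.model_weight for r in rows)


# Invented rows, for illustration only.
ROWS = [
    FeatureRow("breach", 0.5, 3.0),
    FeatureRow("contract", 0.25, 2.0),
    FeatureRow("invoice", 0.75, 1.0),
]
print(contributions(ROWS))
print(raw_score(ROWS))
```

Note how "invoice" outranks "contract" despite a lower Model Weight, because it carries more weight in this particular document.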

- Reviewer Agreement shows, for each training round, how often the classifier and human reviewers agreed. Positive, Negative, and Overall agreement rates are charted as percentages for each round. You can set the Positive Score Threshold higher than the 60% shown in the illustration below, and you can select a later round as the starting point.

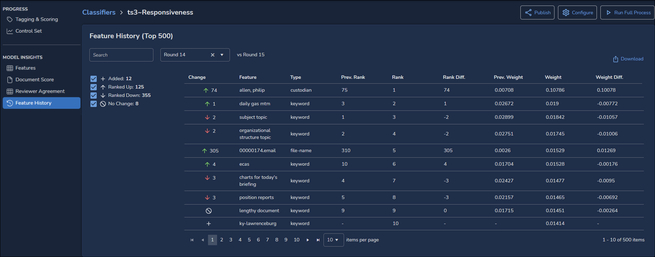
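The exact agreement formulas are not given here. The sketch below uses one straightforward reading, which is an assumption: a reviewer-Positive document counts as agreement when its score is at or above the Positive Score Threshold, a reviewer-Negative document counts as agreement when its score is below it, and Overall is the share of all tagged documents in the round that agree. The `start_round` parameter imitates choosing a later starting round; all names and data are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TaggedDoc:
    round_no: int            # training round in which the document was tagged
    score: float             # classifier score on an assumed 0-100 scale
    reviewer_positive: bool  # True if the reviewer tagged it Positive


def agreement_by_round(docs: list[TaggedDoc], threshold: float = 60.0,
                       start_round: int = 1) -> dict[int, dict[str, Optional[float]]]:
    """Positive, Negative and Overall agreement (percent) for each round."""
    rounds: dict[int, list[TaggedDoc]] = {}
    for doc in docs:
        if doc.round_no >= start_round:
            rounds.setdefault(doc.round_no, []).append(doc)
    result = {}
    for rnd in sorted(rounds):
        group = rounds[rnd]
        pos = [d for d in group if d.reviewer_positive]
        neg = [d for d in group if not d.reviewer_positive]
        pos_agree = sum(d.score >= threshold for d in pos)
        neg_agree = sum(d.score < threshold for d in neg)
        result[rnd] = {
            "positive": 100 * pos_agree / len(pos) if pos else None,
            "negative": 100 * neg_agree / len(neg) if neg else None,
            "overall": 100 * (pos_agree + neg_agree) / len(group),
        }
    return result


# Invented tagging data, for illustration only.
DOCS = [
    TaggedDoc(1, 72, True), TaggedDoc(1, 45, True),
    TaggedDoc(1, 30, False), TaggedDoc(1, 65, False),
    TaggedDoc(2, 88, True), TaggedDoc(2, 61, True),
    TaggedDoc(2, 20, False), TaggedDoc(2, 55, False),
]
print(agreement_by_round(DOCS))                # default 60% threshold
print(agreement_by_round(DOCS, threshold=70))  # a stricter threshold
```

Raising the threshold to 70 lowers round 2's Positive agreement, because the document scored 61 no longer counts as a classifier Positive.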

- Feature History compares the top 500 features from the latest round with their rankings in a previous round. You can download the table as a CSV.

The features are sorted by Rank, with columns showing Change, Type, Previous Rank, Rank Difference, Previous Weight, Weight, and Weight Difference. You can filter by the following Change categories, each shown with a summary count:

- Added

- Ranked Up

- Ranked Down

- No Change

This lets users evaluate how further tagging has affected the model's analysis.

NOTE: This report is available only for training rounds closed after the upgrade to Reveal 11.8.
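The Change categories can be reproduced from two ranked feature lists. The sketch below covers only the rank columns, not the weight columns. It assumes 1-based ranks and defines Rank Difference as previous rank minus current rank, so a positive value means the feature moved up; Reveal's sign convention is not stated here, and the feature lists are invented.

```python
from collections import Counter
from typing import Optional


def feature_history(previous: list[str], latest: list[str], top_n: int = 500
                    ) -> list[tuple[int, str, str, Optional[int], Optional[int]]]:
    """Return (rank, feature, change, previous rank, rank difference) rows."""
    prev_rank = {name: i for i, name in enumerate(previous, start=1)}
    rows = []
    for rank, name in enumerate(latest[:top_n], start=1):
        old = prev_rank.get(name)
        if old is None:
            change, diff = "Added", None      # not ranked in the previous round
        elif old > rank:
            change, diff = "Ranked Up", old - rank
        elif old < rank:
            change, diff = "Ranked Down", old - rank
        else:
            change, diff = "No Change", 0
        rows.append((rank, name, change, old, diff))
    return rows


# Invented rankings, best first.
PREVIOUS = ["contract", "breach", "invoice", "lunch"]
LATEST = ["breach", "contract", "invoice", "merger"]
rows = feature_history(PREVIOUS, LATEST)
for row in rows:
    print(row)
print(Counter(change for _, _, change, _, _ in rows))  # summary counts
```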

To exit the Classifier Details screen, go to the top and click Classifiers in the breadcrumb path.

For more information on using Classifiers, see Supervised Learning Overview.

Last Updated 9/20/2023